Outliers Processing

Some data analysts pay no attention to outliers, and some may be encountering the term for the first time in this article. Outliers have a significant impact on many statistical indicators. How to handle them depends on many factors, some simple and some more complex, including the type of statistical indicator: the analyst must know which indicators are robust (resistant to outliers) and which are not, since this determines how strongly outliers affect them.

For example, the mean is considered one of the best measures of central tendency, but it is far more sensitive to outliers than the median, even though the median is generally regarded as a less accurate measure than the mean.
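To make the contrast concrete, here is a minimal sketch using Python's standard `statistics` module. The two age lists are invented for illustration; the second one contains a single mistyped value.

```python
from statistics import mean, median

# Hypothetical ages (in years) from a study about children.
ages_clean = [2, 3, 3, 4, 5]

# Same data, but the last age was mistyped as 22 (illustrative entry error).
ages_with_outlier = [2, 3, 3, 4, 22]

mean_clean = mean(ages_clean)
mean_outlier = mean(ages_with_outlier)
median_clean = median(ages_clean)
median_outlier = median(ages_with_outlier)

print("mean:  ", mean_clean, "->", mean_outlier)      # 3.4 -> 6.8
print("median:", median_clean, "->", median_outlier)  # 3 -> 3
```

A single outlying value doubles the mean here, while the median does not move at all.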

In the following lines, I will tackle one important and relatively simple aspect of outliers: the methods of processing them.

Methods of processing outliers:

1. Revision of the source: we go back to the source to check the value. If it is an entry mistake, it is corrected. For example, in a study about children, an age recorded as 22 instead of 2 is easily recognized as an entry mistake and corrected.

2. Logical processing of outliers: some erroneous values can be detected through logical checks. For example, when studying the labor force, the record of a 7-year-old is deleted because a child of that age is not classified as part of the labor force.

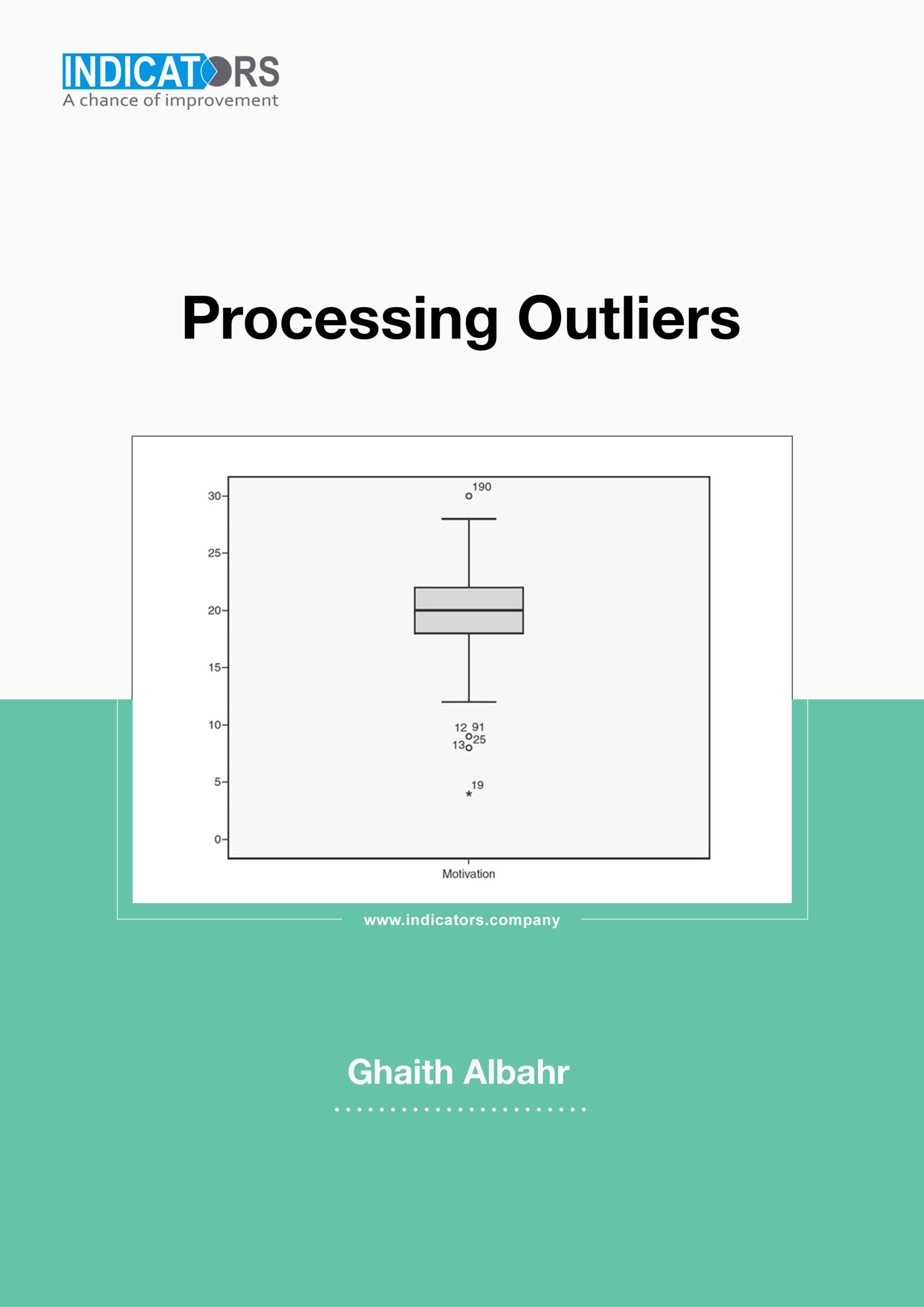

3. Distinguishing between what to keep and what to delete: This step is very demanding, as there are no precise criteria for accepting or rejecting outliers. Here SPSS offers a useful feature: its boxplots classify unusual values into two types, Outliers (values lying between 1.5 and 3 times the inter-quartile range below the first quartile or above the third quartile) and Extreme values (values lying more than 3 times the inter-quartile range beyond those quartiles). In other words, it separates data far from the center of the data from data extremely far from it. One workable approach is to adopt this classification, keeping outliers and deleting extreme values.

4. Replacing the outliers that have been deleted: The last and most sensitive step is deciding what to do with the deleted outliers: leave them deleted (as missing values) or replace them. Either choice entails consequences and challenges. If we decide to replace them, we must then choose an appropriate replacement method. Replacing missing values is itself complicated, with various methodologies and options, each of which affects the results of the data analysis in some way (I will talk about replacing missing values in another post).
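Steps 3 and 4 can be sketched in a few lines of Python. This is only an illustration, not SPSS's implementation: it assumes the common boxplot convention (outliers lie between 1.5 and 3 inter-quartile ranges beyond the quartiles, extreme values lie more than 3 beyond them), it computes quartiles with `statistics.quantiles` using the `"inclusive"` method, which may differ slightly from the quartiles SPSS reports, and the function name `classify` and the sample data are hypothetical.

```python
from statistics import quantiles

def classify(values):
    """Label each value as 'normal', 'outlier', or 'extreme' (IQR rule)."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    labels = []
    for v in values:
        # Distance beyond the nearest quartile (0 if inside the box).
        distance = max(q1 - v, v - q3, 0)
        if distance > 3 * iqr:
            labels.append("extreme")
        elif distance > 1.5 * iqr:
            labels.append("outlier")
        else:
            labels.append("normal")
    return labels

# Hypothetical measurements, invented for illustration.
data = [10, 12, 12, 13, 14, 15, 16, 25, 60]
labels = classify(data)

# Step 3: keep outliers, delete extreme values.
# Step 4 (one option): leave the deleted values as missing (None).
cleaned = [v if label != "extreme" else None for v, label in zip(data, labels)]

print(labels)
print(cleaned)  # [10, 12, 12, 13, 14, 15, 16, 25, None]
```

Here 25 is labeled an outlier and kept, while 60 is labeled extreme and becomes a missing value. Whether to leave it missing or replace it is the decision described in step 4.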

The methodologies for dealing with outliers cannot be summarized in these few lines. Deleting outliers raises further choices: should we leave them as missing values or replace them with alternative values? Also, when we delete outliers and reanalyze the data, we may find that new outliers have appeared, values that were not considered outliers in the dataset before it was modified (before the outliers were deleted in the first stage). I therefore recommend that data analysts study this topic further, in proportion to the volume and sensitivity of their data.
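The reappearance of outliers after deletion can be reproduced with a small experiment. The sketch below assumes the 1.5 inter-quartile-range rule and the `"inclusive"` quartile method of `statistics.quantiles`; the helper name `flag_outliers` and the data are invented for illustration.

```python
from statistics import quantiles

def flag_outliers(values, k=1.5):
    """Return the values lying more than k * IQR beyond the quartiles."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical data, invented for illustration.
data = [10, 11, 12, 13, 14, 20, 50, 55]

rounds = []  # outliers flagged in each pass
while len(data) >= 4:
    flagged = flag_outliers(data)
    if not flagged:
        break
    rounds.append(flagged)
    # Delete the flagged values, then re-analyze what remains.
    data = [v for v in data if v not in flagged]

print(rounds)  # [[55], [50], [20]]
print(data)    # [10, 11, 12, 13, 14]
```

In the first pass only 55 is flagged. Once it is deleted, the quartiles tighten and 50 becomes an outlier, and after that 20 does too. Repeating the deletion mechanically can therefore strip away far more data than intended, which is why each round calls for judgment.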

By:

Ghaith AlBahr (Mustafa Deniz): CEO of INDICATORS

